Chapter V: Angry Reacts Only – Harvesting Cash from the Media Ecology

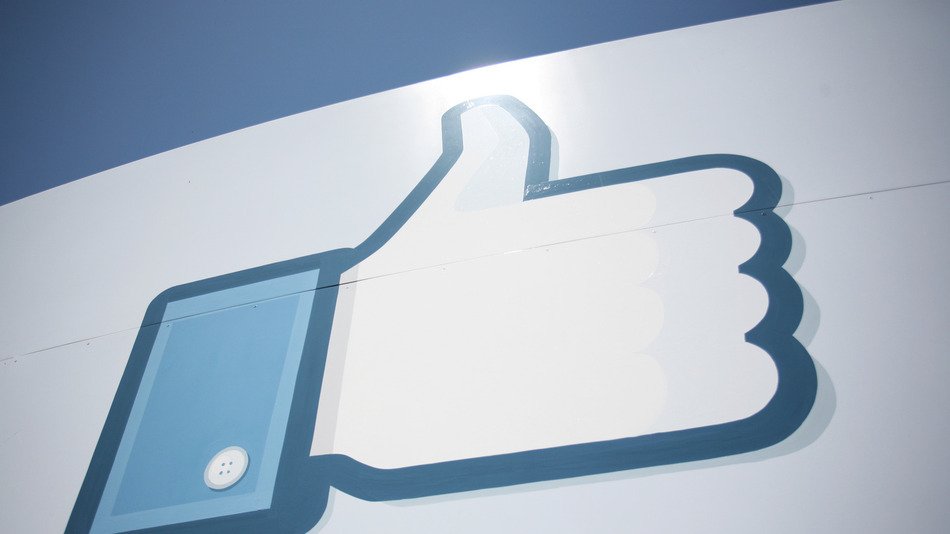

The "old" Facebook like button, as it appeared in 2009.

“Researchers have found, for example, that the algorithms running social media platforms tend to show pictures of ex-lovers having fun. No, users don’t want to see such images. But, through trial and error, algorithms have discovered showing pictures of our exes having fun increases our engagement. We are drawn to click on those pictures and see what our exes are up to, and we’re more likely to do it if we’re jealous they’ve found a new partner. The algorithms don’t know why it works, and they don’t care. They only maximise whatever metric we’ve instructed them to pursue.” – Douglas Rushkoff, Team Human

In September 1993, Global Network Navigator (GNN), then a division of the publisher now known as O’Reilly Media, sold the first ever clickable advertisement on the World Wide Web to a law firm based in Silicon Valley. By the late 1990s, during the dot-com boom, internet advertising in the form of clickable icons or Graphics Interchange Format (GIF) images that flashed enticements across the screen was ubiquitous.

Advertising alongside search engine results pages (SERPs) became the standard in October of 2000, when Google launched its AdWords service. Companies and brands would pay for sponsored links that appeared at the top of search results, bidding for the top spot using automated algorithms. When a person browsing clicked on the ad, the company paid for the privilege – what’s known as a “Pay Per Click” (PPC) advertisement.

Advertising wares and services on a PPC basis may have connected customers to products they wanted or needed, but it hurt the revenue of the previous arbiters of advertising and commerce – newspapers and magazines. Newspapers could no longer rely on revenue from advertisers, since many of them had found cheaper and more effective alternatives online.

According to the Pew Research Center, newspaper advertising revenue in the United States topped US$49 billion in 2004 – it now sits at about US$18 billion for all US newspapers combined. By contrast, Google’s US$110.8 billion in revenue derives mostly from Google AdWords, now Google Ads.

Newspapers had to adapt not only to the medium but also to the media ecology at large. Newspapers that migrated online – and digital-only start-ups alongside them – needed to realise that providing nuanced, balanced reporting was not the way of the future. To make real money, they needed people to find them in search engine results and click. Calls to action, not calls to thought.

The people reading their content needed to be as digital as the machines that host it.

All Positions Contested

In the week to August 28th, 2014, major video games publications including The Escapist, Gamasutra, Kotaku, The Daily Beast, Vice, Destructoid, and even conventional masthead The Guardian proclaimed that the identity or fandom of video gaming was toxic and rooted in a culture of homophobia, sexism, and racism. The articles appeared to be a coordinated attack on the current state of gaming culture, and they led to a small yet significant backlash against the games press known as #GamerGate, a hashtag calling for a restoration of ethical standards in video games journalism.

Proponents of #GamerGate were lambasted for conducting a relentless harassment campaign against critics of gamer culture, notably feminist and left-wing critics such as independent game developer Zoe Quinn; fellow developer Brianna Wu; and Anita Sarkeesian, host and founder of not-for-profit feminist cultural studies organisation Feminist Frequency.

At the time, feminist writer Jessica Valenti wrote that "the movement's much-mocked mantra, 'It's about ethics in journalism'" was seen by others as "a natural extension of sexist harassment and the fear of female encroachment on a traditionally male space." Sarkeesian and her adherents still contend that sexist “tropes” or elements in video games contribute to real world attitudes against women, though media theorists have long debunked notions that players of video games are more predisposed to violence, calling such claims a “moral panic” in the wake of mass shootings.

In mid-September of that year, provocateur and then-Breitbart editor Milo Yiannopoulos published discussions from a closed mailing list known as GameJournoPros. He reported that the discussions detailed the coordinated publishing of op-eds declaring the “end of gamers” and the mockery of certain developers, and he presented this as evidence of collusion in the gaming press.

Much like President Bush’s declaration – “Either you are with us, or you are with the terrorists” – the video games press declared that you were either a toxic “gamer” or part of the new vanguard of “woke” identitarian games consumption, which favoured left-wing ideological bias in place of “conservative” ideas. The culture war sorted itself into binary groups, choosing a digital battlefield and leading a charge to preserve or change a purely digital media ecology.

Both sides were using the medium as mass surveillance to eke out their fronts, fire salvos in each other’s direction, and gin up support for their cause. It is fitting that their respective “causes” were video games, as social media uses gamification to ensure people use it and keep using it; “rewards and incentive systems determine usage,” as Israeli-Macedonian psychologist Sam Vaknin describes it.

140 Characters Hate

Vaknin says that the truncated nature of communication in social networks is more conducive to hateful responses; it only takes a few words to express hatred, e.g., “go to hell! Fuck you!”, whereas love and compassion require many more words to convey: perhaps an order of magnitude more, as in a letter or a “real world” gesture, lest the sentiment feel insincere.

Orwell’s prescient “two minutes hate” – a spontaneous eruption of malice toward Party enemies in Oceania – may mirror this assessment. A continuous “twenty minutes” hate would lose steam before long; it could even give rise to the realisation among participants that their hate is manufactured and that they are indeed being manipulated.

Vaknin also argues that the social media/mass surveillance circuit is built on ambiguity, the fear of the other. “The only way to disambiguate something is to get to know it,” he says. Intimacy, he adds, reduces the need for addiction and dependency.

Former Facebook engineers have admitted that the medium was built around “continuous partial attention,” as Justin Rosenstein – inventor of the “like” button – described to The Guardian. Nir Eyal, author of Hooked: How to Build Habit-Forming Products, says mass surveillance media exploit negative emotions: “feelings of boredom, loneliness, frustration, confusion and indecisiveness often instigate a slight pain or irritation and prompt an almost instantaneous and often mindless action to quell the negative sensation.”

Rolled out in February 2009, replacing the star rating and “awesome” button, the “like” button gives users a temporary and fleeting boost of dopamine – the neurotransmitter most associated with reward pathways – and allows Facebook and partners to track user behaviour and preferences for later advertising and remarketing efforts. The like button is also ambiguous; does “liking” a post that describes another user’s misfortune mean the liker is revelling in that misery? Offering sympathy? Simply showing they have read and understood the message?

This ambiguity paired with gamification gives rise to a media ecology that conditions users to stay on the system. Ambiguity is also biased towards amygdala, “lizard brain” reactions of hatred, upset, outrage, and terror. More nuanced or reasoned content would be of no value to the medium, as it encourages neocortical, higher-brain reasoning and analysis. It also requires full, instead of partial, attention. As mentioned earlier, a sustained “twenty minutes hate” would not work as the hatred would (presumably) give way to self-reflection.

Vaknin says that suicide rates among youth have jumped 31% in the last decade, which he attributes to social media’s bias toward anxiety and depression-causing content. Harvesting the cash from this media ecology is achieved through constant “reward” activation and treating real human intimacy as a threat.

It’s working: Facebook generated $55 billion in advertising revenue in 2018. That’s $37 billion more than the advertising revenue earned by the intimate, unambiguous, nuanced, and reasoned reporting of all US newspapers combined.

If one’s life is mediated through Facebook and other mass surveillance media, the conditioning to be harvested for cash is almost limitless.